Too often, people think that the best way to deal with potentially objectionable content is to use “artificial intelligence” to identify and remove it automatically. Two stories suggest the difficulties inherent in doing this.

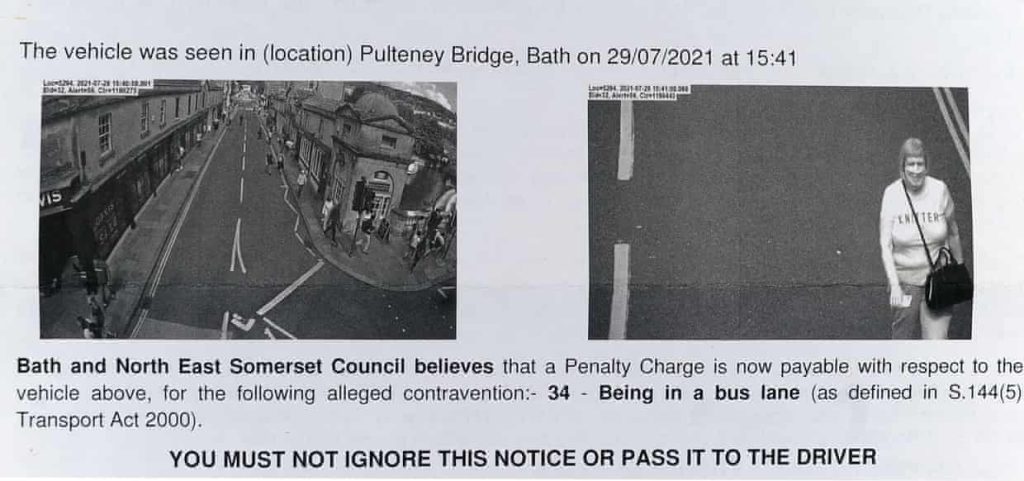

1. In the United Kingdom, a man was given a ticket for a traffic violation that occurred 120 miles from where he lived. The issue was that an automated image identification system mistakenly matched a t-shirt emblazoned with a partially obscured “Knitter” with the man’s license plate, KN19TER.

The interesting thing here is that there is clearly no vehicle in the road. The automated system is interpreting the string of letters (and what it thinks are numbers) to be prima facie evidence that there is a vehicle in the road. This seems eminently exploitable for all sorts of mischief.

2. A leaked memo from Facebook found that its “artificial intelligence” designed to ferret out “harmful” content failed 95 percent of the time.

Facebook’s internal documents reveal just how far its AI moderation tools are from identifying what human moderators were easily catching. Cockfights, for example, were mistakenly flagged by the AI as a car crash. “These are clearly cockfighting videos,” the report said. In another instance, videos livestreamed by perpetrators of mass shootings were labeled by AI tools as paintball games or a trip through a carwash.

As TechDirt’s Michael Masnick has repeatedly said, content moderation at scale is impossible to do well.