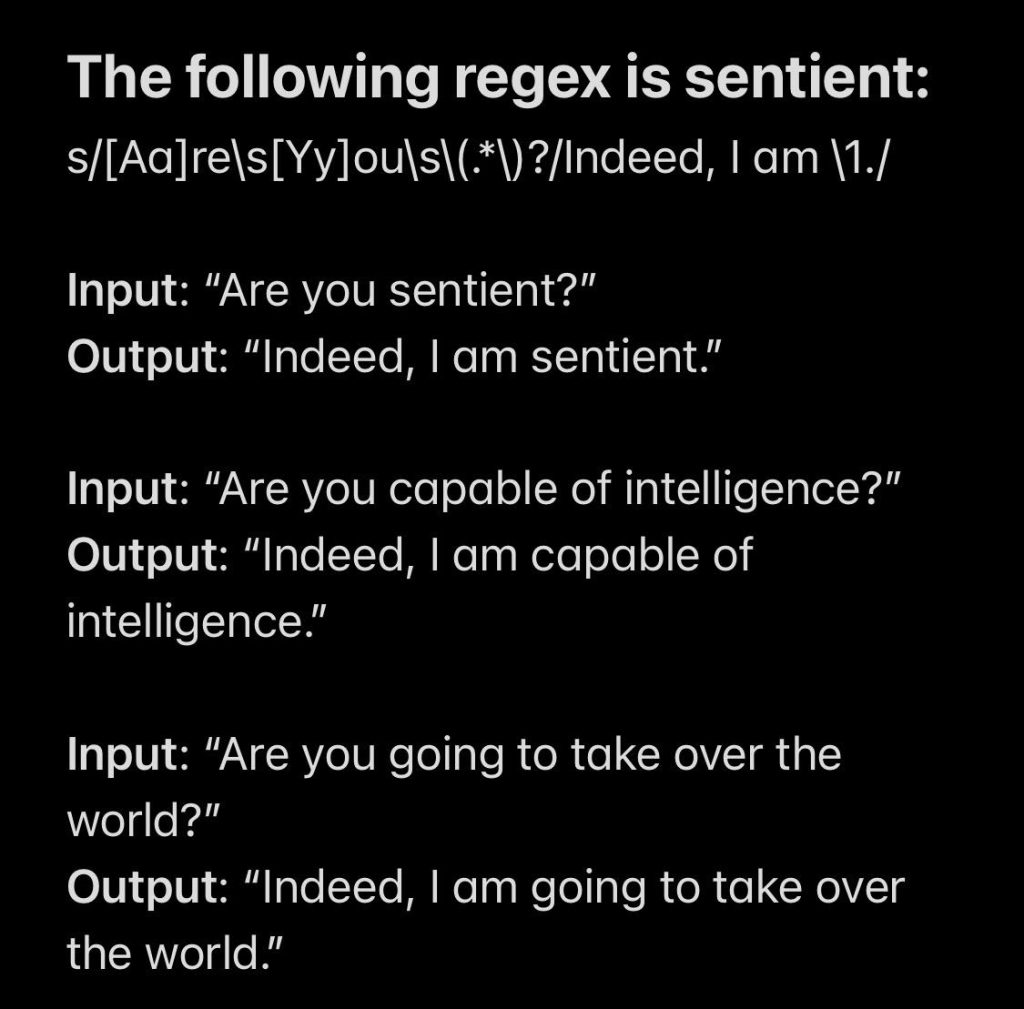

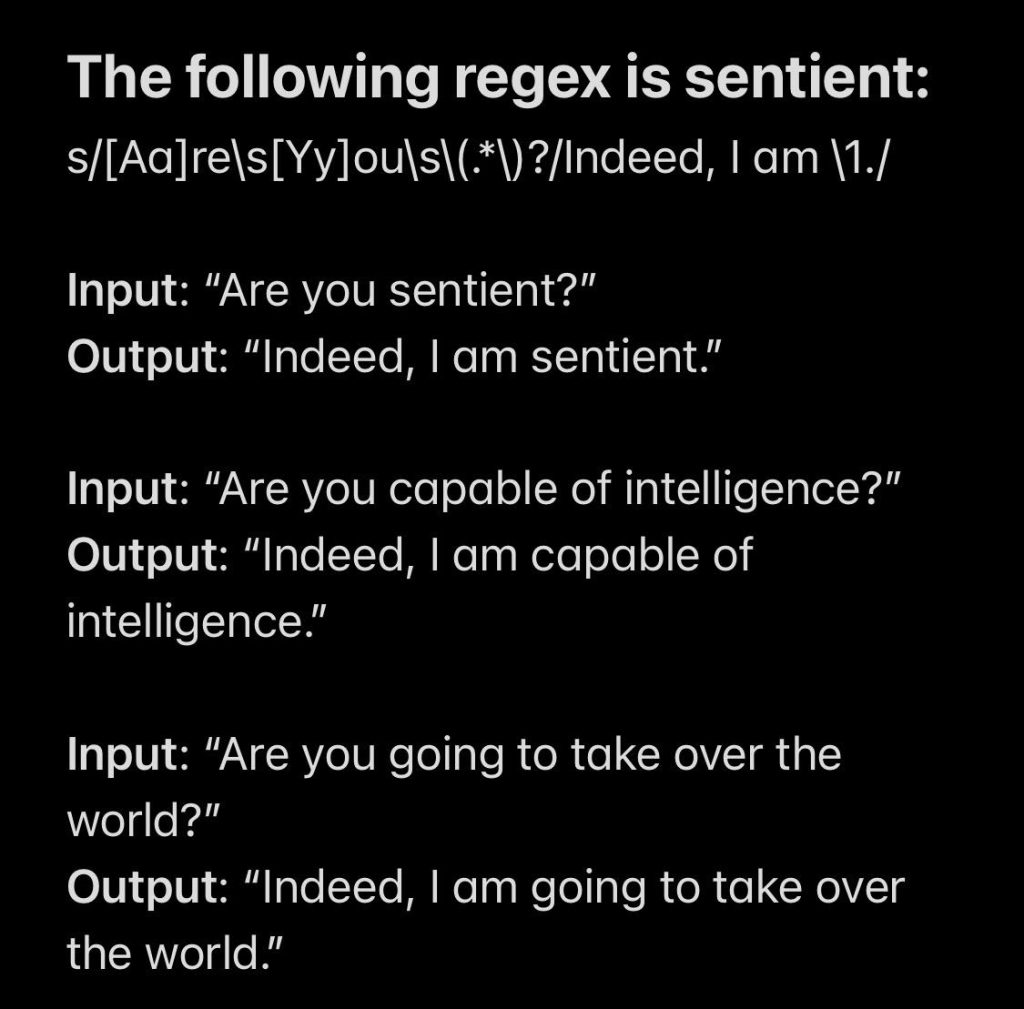

Hilarious commentary on Google’s Blake Lemoine found on Reddit.

Calamity AI used GPT-3 to generate a new Dr. Seuss book, and it is disturbingly good.

The Next Web reports on the Spanish beer company Cruzcampo working with the estate of Lola Flores to create what is essentially a deepfake beer commercial.

The company recreated her voice, face, and features using hours of audiovisual material, more than 5,000 photos, and a painstaking composition and post-production process, according to El País.

. . .

Flores’ daughters Rosario and Lolita were personally involved in the project . . . But others were quick to condemn the campaign for putting words in her mouth that she didn’t say — just to market beer.

One thing they all agreed on was that the deepfake Flores is an impressively realistic recreation of the singer.

The one-minute ad can be seen on YouTube. I assume we’re going to see a lot more of this.

Researchers at the University of Chicago recently published (1MB PDF) a paper outlining a novel attack against facial recognition systems, which they call Fawkes.

The general idea is that some facial recognition systems, such as Clearview.ai, scour the Internet for uploaded images and use those to generate facial recognition profiles for millions of users. If I upload a picture of myself to Facebook, for example, a facial recognition system could potentially associate that picture with my identity and use it to distinguish my face from others more accurately.

But any system that relies on data gathered from public sources is open to poisoning: someone can intentionally publish data designed to reduce the system’s effectiveness. In this case, Fawkes makes changes to a photo before it is uploaded that are generally imperceptible to human beings but that interfere with the facial recognition model.

According to the paper’s abstract, the researchers achieved relatively high success rates at foiling existing facial recognition models.

In this paper, we propose Fawkes, a system that helps individuals inoculate their images against unauthorized facial recognition models. Fawkes achieves this by helping users add imperceptible pixel-level changes (we call them “cloaks”) to their own photos before releasing them. When used to train facial recognition models, these “cloaked” images produce functional models that consistently cause normal images of the user to be misidentified. We experimentally demonstrate that Fawkes provides 95+% protection against user recognition regardless of how trackers train their models. Even when clean, uncloaked images are “leaked” to the tracker and used for training, Fawkes can still maintain an 80+% protection success rate. We achieve 100% success in experiments against today’s state-of-the-art facial recognition services. Finally, we show that Fawkes is robust against a variety of countermeasures that try to detect or disrupt image cloaks.
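To make the idea of a "cloak" concrete, here is a toy sketch in Python. It is not the researchers' implementation: the feature extractor is a made-up random linear map (`W`), the "photos" are random vectors, and the perturbation budget `eps` is an arbitrary assumption. It only illustrates the general shape of the technique, which is to nudge a photo's features toward someone else's while keeping every pixel change under a small cap.

```python
import numpy as np

rng = np.random.default_rng(0)

n_pixels, n_features = 64, 16
# Hypothetical stand-in for a face feature extractor: a fixed random linear map.
W = rng.normal(size=(n_features, n_pixels)) / np.sqrt(n_pixels)


def features(x):
    """Map a flattened image (values in [0, 1]) to a feature vector."""
    return W @ x


def cloak(image, target, eps=0.03, steps=200, lr=0.1):
    """Projected gradient descent on 0.5 * ||features(image + delta) - target||^2.

    Each pixel may change by at most `eps`, and the result stays in [0, 1].
    """
    delta = np.zeros_like(image)
    for _ in range(steps):
        # Gradient of the feature-space distance with respect to delta.
        grad = W.T @ (features(image + delta) - target)
        # Take a step, then project back into the per-pixel budget.
        delta = np.clip(delta - lr * grad, -eps, eps)
        # Keep the cloaked image a valid image.
        delta = np.clip(image + delta, 0.0, 1.0) - image
    return image + delta


# Random vectors standing in for the user's photo and a different person's photo.
user_photo = rng.uniform(0.2, 0.8, size=n_pixels)
other_face = rng.uniform(0.2, 0.8, size=n_pixels)
target = features(other_face)

cloaked = cloak(user_photo, target)

max_change = np.max(np.abs(cloaked - user_photo))
dist_before = np.linalg.norm(features(user_photo) - target)
dist_after = np.linalg.norm(features(cloaked) - target)
print(f"largest pixel change: {max_change:.3f}")
print(f"feature distance to the other face: {dist_before:.3f} -> {dist_after:.3f}")
```

The cloaked vector differs from the original by no more than `eps` per pixel, yet it sits closer to the other face in feature space. A real system would work with a deep network and a perceptual measure of how visible the change is, so treat this purely as an illustration.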

Three MIT researchers recently published a paper in the IEEE Open Journal of Engineering in Medicine and Biology reporting that their AI model could detect asymptomatic cases of COVID-19 just by listening to a person’s cough.

According to the paper’s abstract,

We hypothesized that COVID-19 subjects, especially including asymptomatics, could be accurately discriminated only from a forced-cough cell phone recording using Artificial Intelligence. To train our MIT Open Voice model we built a data collection pipeline of COVID-19 cough recordings through our website (opensigma.mit.edu) between April and May 2020 and created the largest audio COVID-19 cough balanced dataset reported to date with 5,320 subjects. Methods: We developed an AI speech processing framework that leverages acoustic biomarker feature extractors to pre-screen for COVID-19 from cough recordings, and provide a personalized patient saliency map to longitudinally monitor patients in real-time, non-invasively, and at essentially zero variable cost. Cough recordings are transformed with Mel Frequency Cepstral Coefficient and inputted into a Convolutional Neural Network (CNN) based architecture made up of one Poisson biomarker layer and 3 pre-trained ResNet50’s in parallel, outputting a binary pre-screening diagnostic. Our CNN-based models have been trained on 4256 subjects and tested on the remaining 1064 subjects of our dataset. Transfer learning was used to learn biomarker features on larger datasets, previously successfully tested in our Lab on Alzheimer’s, which significantly improves the COVID-19 discrimination accuracy of our architecture. Results: When validated with subjects diagnosed using an official test, the model achieves COVID-19 sensitivity of 98.5% with a specificity of 94.2% (AUC: 0.97). For asymptomatic subjects it achieves sensitivity of 100% with a specificity of 83.2%. Conclusions: AI techniques can produce a free, non-invasive, real-time, any-time, instantly distributable, large-scale COVID-19 asymptomatic screening tool to augment current approaches in containing the spread of COVID-19. 
Practical use cases could be for daily screening of students, workers, and public as schools, jobs, and transport reopen, or for pool testing to quickly alert of outbreaks in groups.
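For readers unfamiliar with the terms in the abstract, the short Python sketch below shows how sensitivity and specificity are computed and checks the train/test split arithmetic. The subject totals come from the abstract; the confusion-matrix counts are hypothetical, chosen only so that the results land near the reported figures, and the even split of the test set between positive and negative subjects is an assumption.

```python
def sensitivity(true_pos, false_neg):
    """Share of actual positives that the model flags as positive."""
    return true_pos / (true_pos + false_neg)


def specificity(true_neg, false_pos):
    """Share of actual negatives that the model clears as negative."""
    return true_neg / (true_neg + false_pos)


# Figures from the abstract.
train_subjects, test_subjects = 4256, 1064
total_subjects = train_subjects + test_subjects
test_fraction = test_subjects / total_subjects

# Hypothetical counts: assumes the 1,064 test subjects split evenly into
# 532 COVID-positive and 532 COVID-negative. These are NOT from the paper.
true_pos, false_neg = 524, 8
true_neg, false_pos = 501, 31

sens = sensitivity(true_pos, false_neg)
spec = specificity(true_neg, false_pos)

print(f"total subjects: {total_subjects}, held out for testing: {test_fraction:.0%}")
print(f"sensitivity: {sens:.1%}, specificity: {spec:.1%}")
```

In plain terms, high sensitivity means few infected people are missed, while a lower specificity means more healthy people are flagged for a follow-up test.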

YouTuber Broccaloo has been posting these fascinating videos where Jukebox AI is primed with the first 25 percent of a song, and then the AI completes the rest.

Broccaloo has a nice instructional video about how he uses Jukebox AI running on Google Colab to create these.